🔍 The Challenge

Single-Molecule Localization Microscopy (SMLM) achieves nanometric resolution by isolating light emitters over time. However, increasing acquisition speed requires raising emitter density — which rapidly leads to overlapping blinks that traditional algorithms cannot resolve. Abbelight needed a model that could detect and count emitters even under these high-density conditions.

- ⚡ Need to accelerate image acquisition

- 🔬 Emitters overlap at high densities

- 🧩 Classical algorithms fail in dense regimes

- 🧠 Deep learning offers a scalable alternative

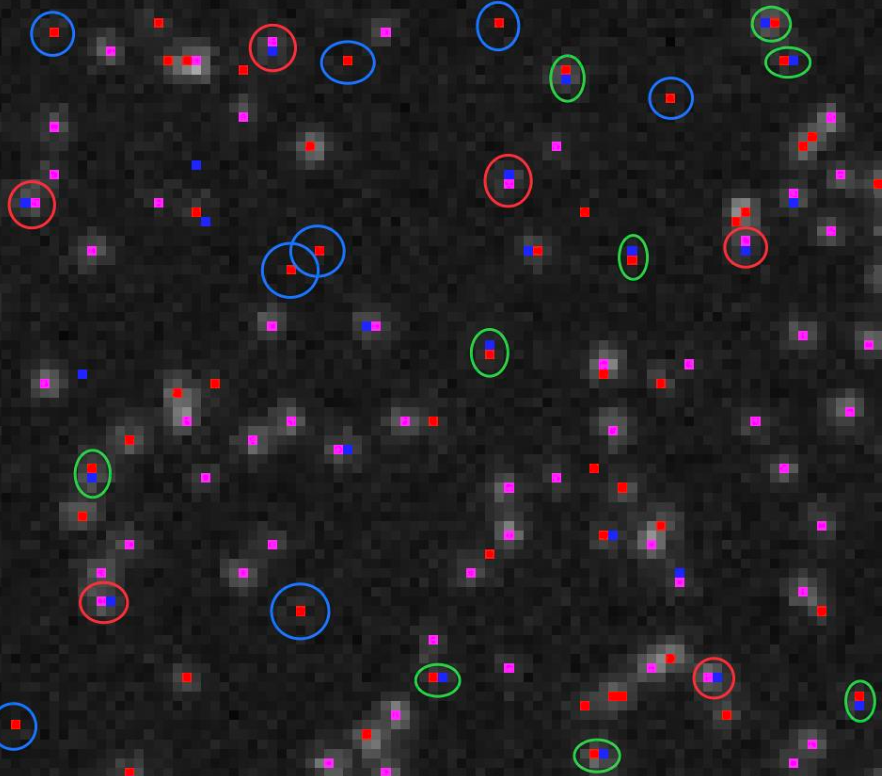

Red marks missed ground-truth emitters, blue marks false positives, and purple marks correct predictions.

💡 The HD-SMLM Approach

We use a 3D-UNet tailored for SMLM movies: a projection layer → encoder (down-blocks with residual convs + Swish) → bottleneck → decoder (up-blocks with skip connections). The temporal axis (e.g., 3–5 frames) is kept intact so the network learns spatio-temporal blink patterns. The final 1×1×1 conv produces a 4-channel probability map for per-pixel counts: 0/1/2/3+.
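As a rough illustration of the 4-class count encoding, the sketch below one-hot encodes per-pixel emitter counts into a 0/1/2/3+ target and decodes a probability map back into counts. The function names, the channels-last layout, and the rule that class index equals count are assumptions made for this example, not details confirmed by the project.

```python
import numpy as np

def counts_to_target(counts: np.ndarray, n_classes: int = 4) -> np.ndarray:
    """One-hot encode per-pixel emitter counts (hypothetical helper).

    Counts of 3 or more share the last class, mirroring the 0/1/2/3+ scheme.
    Assumes an integer array and a channels-last output layout.
    """
    clipped = np.clip(counts, 0, n_classes - 1)
    return np.eye(n_classes, dtype=np.float32)[clipped]

def probs_to_counts(probs: np.ndarray) -> np.ndarray:
    """Pick the most likely count class per pixel (class 3 means '3 or more')."""
    return np.argmax(probs, axis=-1)

# Toy 2x2 patch: the pixel holding 5 emitters falls into the '3+' class.
counts = np.array([[0, 1], [2, 5]])
target = counts_to_target(counts)   # shape (2, 2, 4)
decoded = probs_to_counts(target)   # per-pixel class indices
```

The same decoding step would apply to the network output once the 4 channels have been turned into probabilities.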

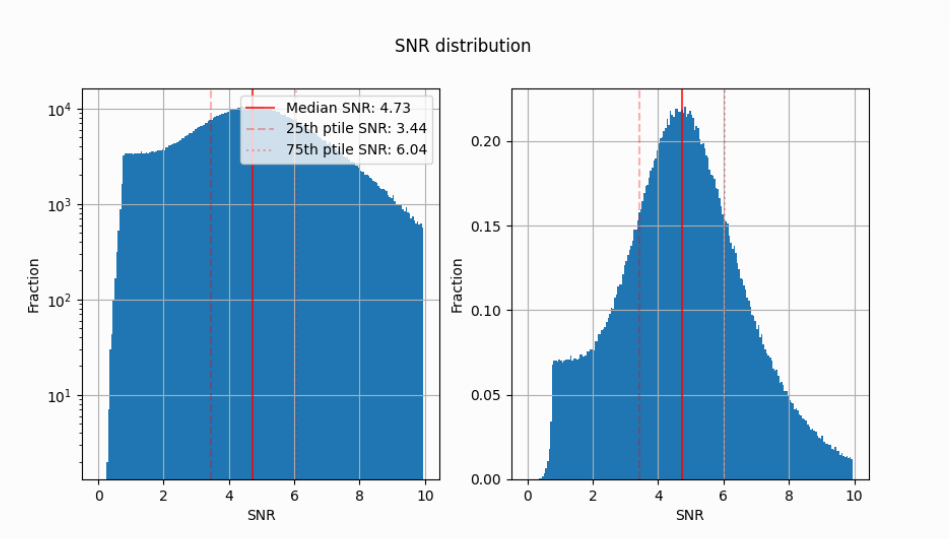

The Signal-to-Noise Ratio (SNR) measures how strong the emitter’s signal is compared to background noise. Low-SNR events are nearly indistinguishable from background, while extremely high-SNR events can dominate the loss and bias learning. To ensure consistent and balanced training, we cap SNR values at 2: all signals with SNR ≥ 2 are treated as reliable, while weaker ones are excluded from learning. This choice filters out ambiguous detections, stabilizes training, and focuses the model on physically meaningful emitter events.
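The exclusion step can be sketched as a simple mask over simulated emitters. The SNR definition used here (background-subtracted peak divided by the noise standard deviation) and the names `emitter_snr` and `keep_reliable` are assumptions for illustration; the project may compute SNR differently.

```python
import numpy as np

def emitter_snr(peak, background, noise_std):
    """Assumed SNR definition: background-subtracted peak over noise std."""
    return (np.asarray(peak, dtype=float) - background) / noise_std

def keep_reliable(peaks, background, noise_std, snr_min=2.0):
    """Boolean mask of emitters whose SNR reaches the threshold."""
    return emitter_snr(peaks, background, noise_std) >= snr_min

# Three emitters over a background of 100 counts with noise std 10.
peaks = np.array([105.0, 120.0, 180.0])
mask = keep_reliable(peaks, background=100.0, noise_std=10.0)
reliable_peaks = peaks[mask]  # emitters kept as training labels
```

Only the emitters selected by the mask would contribute labels during training; the others are treated as ambiguous and left out.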

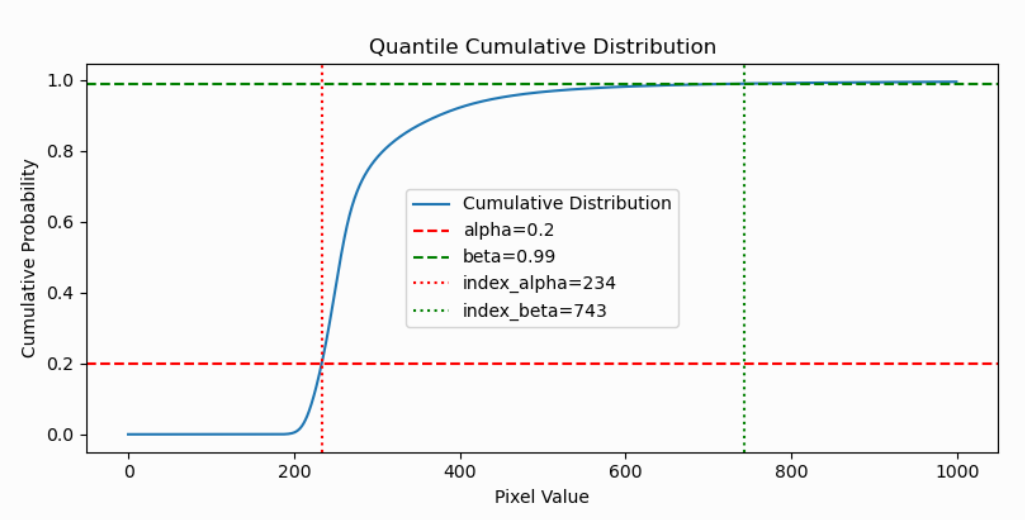

Separately, we apply intensity clipping for normalization on raw 16-bit TIFF inputs, typically between the 20th and 99th percentiles of the pixel distribution. This removes extreme background noise and saturation peaks while keeping the relevant emitter information. After clipping, intensities are normalized (e.g., scaled to −1…1), which improves gradient flow, speeds up convergence, and allows the same model to handle data from multiple microscopes and illumination conditions.
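A minimal NumPy sketch of this normalization is shown below. It assumes the percentiles are computed over the whole movie rather than per frame, and the function name `clip_and_normalize` is hypothetical.

```python
import numpy as np

def clip_and_normalize(frames, low_pct=20.0, high_pct=99.0):
    """Clip intensities to a percentile window, then rescale to [-1, 1]."""
    frames = np.asarray(frames, dtype=np.float64)
    lo, hi = np.percentile(frames, [low_pct, high_pct])
    if hi <= lo:
        # Degenerate (flat) input: nothing to rescale.
        return np.zeros_like(frames)
    clipped = np.clip(frames, lo, hi)
    return 2.0 * (clipped - lo) / (hi - lo) - 1.0

# Toy 16-bit movie: 5 frames of 32x32 pixels with random intensities.
rng = np.random.default_rng(0)
movie = rng.integers(0, 2**16, size=(5, 32, 32), dtype=np.uint16)
normalized = clip_and_normalize(movie)
```

Because the window is defined by percentiles rather than absolute values, the same function can be applied to acquisitions with very different intensity ranges.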

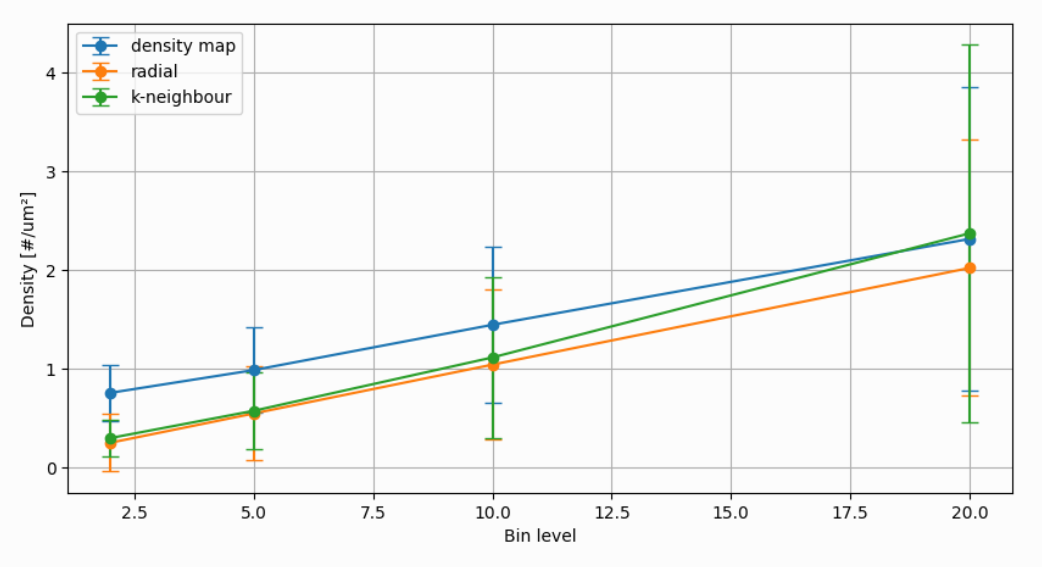

Traditional pixel-exact metrics are too rigid for microscopy, where emitters may be predicted one pixel away from their ground truth due to diffraction, sampling, or reconstruction variance. We therefore designed a custom k-nearest-neighbor (kNN) metric system. For each predicted emitter, we look for the k closest ground-truth emitters (usually k = 8). If a match is found within this neighborhood, the prediction counts as a true positive. This makes evaluation more physically realistic, tolerating natural spatial variance while still penalizing false or missing detections.
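One possible reading of this metric is sketched below. Several details are assumptions, since the text does not specify them: matching is greedy and one-to-one, a candidate only counts if it lies within a tolerance `max_dist` (a hypothetical parameter, in pixels), and the function name `knn_match` is made up for the example.

```python
import numpy as np

def knn_match(pred, truth, k=8, max_dist=1.5):
    """Greedy tolerance-based matching of predicted to ground-truth emitters."""
    pred = np.asarray(pred, dtype=float).reshape(-1, 2)
    truth = np.asarray(truth, dtype=float).reshape(-1, 2)
    truth_used = np.zeros(len(truth), dtype=bool)
    tp = 0
    if len(truth) > 0:
        for p in pred:
            dist = np.linalg.norm(truth - p, axis=1)
            # Visit the k closest ground-truth emitters, nearest first.
            for j in np.argsort(dist)[:k]:
                if dist[j] > max_dist:
                    break  # remaining candidates are even farther away
                if not truth_used[j]:
                    truth_used[j] = True  # each truth emitter matches once
                    tp += 1
                    break
    fp = len(pred) - tp   # predictions without a match
    fn = len(truth) - tp  # ground-truth emitters never matched
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn,
            "precision": precision, "recall": recall, "f1": f1}

# One prediction is off by a pixel, one is exact, one is spurious.
truth = [(10, 10), (20, 20), (30, 30)]
pred = [(10, 11), (20, 20), (50, 50)]
scores = knn_match(pred, truth)
```

In this toy case the off-by-one prediction still counts as correct, while the spurious prediction and the unmatched ground-truth emitter are penalized as a false positive and a false negative.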

These design choices — SNR curation, normalization clipping, and kNN-based evaluation — make HD-SMLM robust to optical noise, dense emitter overlap, and real-world acquisition variability. The final probability maps serve as priors for Abbelight’s multi-emitter fitting algorithms, combining AI inference with precise optical modeling.

⚙️ Technical Implementation

- Architecture: 3D-UNet with residual blocks and Swish activation

- Loss: Hybrid Tversky

- Data: Simulated + experimental

- Training: Keras 3.0 with MLflow experiment tracking

- Optimization: Adam, WarmupCosineStepLR learning rate scheduler

- Hyperparameter exploration: exhaustive grid search, training one model for every combination in a designated search space

- Deployment: Docker container (Linux & Windows) + custom GPU-balancer for multi-GPU training
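For reference, the plain Tversky loss that the "Hybrid Tversky" entry builds on can be sketched in NumPy as follows. How the hybrid variant combines it with other terms is not specified here, so this shows only the standard Tversky index; the weights `alpha` and `beta` are illustrative values, not the project's settings.

```python
import numpy as np

def tversky_loss(y_true, y_pred, alpha=0.3, beta=0.7, eps=1e-7):
    """Mean over classes of 1 - Tversky index (channels-last, one-hot targets).

    alpha weights false positives and beta weights false negatives;
    alpha = beta = 0.5 reduces to the Dice loss.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    axes = tuple(range(y_true.ndim - 1))  # sum over every axis but classes
    tp = np.sum(y_true * y_pred, axis=axes)
    fp = np.sum((1.0 - y_true) * y_pred, axis=axes)
    fn = np.sum(y_true * (1.0 - y_pred), axis=axes)
    index = (tp + eps) / (tp + alpha * fp + beta * fn + eps)
    return float(np.mean(1.0 - index))

# Four pixels, one per count class (0/1/2/3+).
y_true = np.eye(4)[[0, 1, 2, 3]]
perfect = tversky_loss(y_true, y_true)
wrong = tversky_loss(y_true, np.eye(4)[[1, 2, 3, 0]])
```

Setting `beta` above `alpha` penalizes missed emitters more than spurious ones, which is one common way to push a detector toward higher recall.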

📊 Results

The best model, Wolf, achieved high accuracy on simulated datasets (recall 0.92 / precision 0.84 / F1 ≈ 0.87). Real biological data (bin5/bin10) exposed challenges linked to noise, blinking variability, and label uncertainty, while confirming the model’s sensitivity to true emitters even when unseen during training.

- 🏆 F1 = 0.87 on simulated data

- 🎯 Recall > 0.9 on Tier 1 targets

- 🔬 Robust detection at multiple densities

- 📈 Scalable UNet architecture with variable input size

A second model, Pandas, jointly trained on real + synthetic data, confirmed the ability to generalize but highlighted the need for domain-specific fine-tuning.

🧠 My Role

I co-led the design and development of the HD-SMLM deep learning pipeline at BionomeeX, collaborating directly with Abbelight’s R&D team to ensure full integration with their imaging stack. My work encompassed both algorithmic design and system engineering.

- 🧬 Built and optimized the 3D-UNet architecture (Keras)

- ⚙️ Worked on the data-generation and decorrelated temporal binning pipelines

- 📊 Managed MLflow experiment tracking and metrics evaluation

- 🧩 Implemented GPU balancer and Dockerized training environment

- 🧠 Tuned and explored hyperparameters for precision/recall optimization

- 🤝 Collaborated closely with Abbelight researchers for validation and deployment

Beyond coding, I strengthened my expertise in scientific AI modeling, resource orchestration, and interdisciplinary collaboration — bridging optical physics, deep learning, and large-scale data engineering.

Key technologies mastered: Python, Keras 3, MLflow, NumPy, FastAPI, Docker, GPU Balancer, and scientific image processing (3D TIFF 32-bit).